Trusted by hundreds of enterprises and fast-growing companies

AI-driven, unified control platform

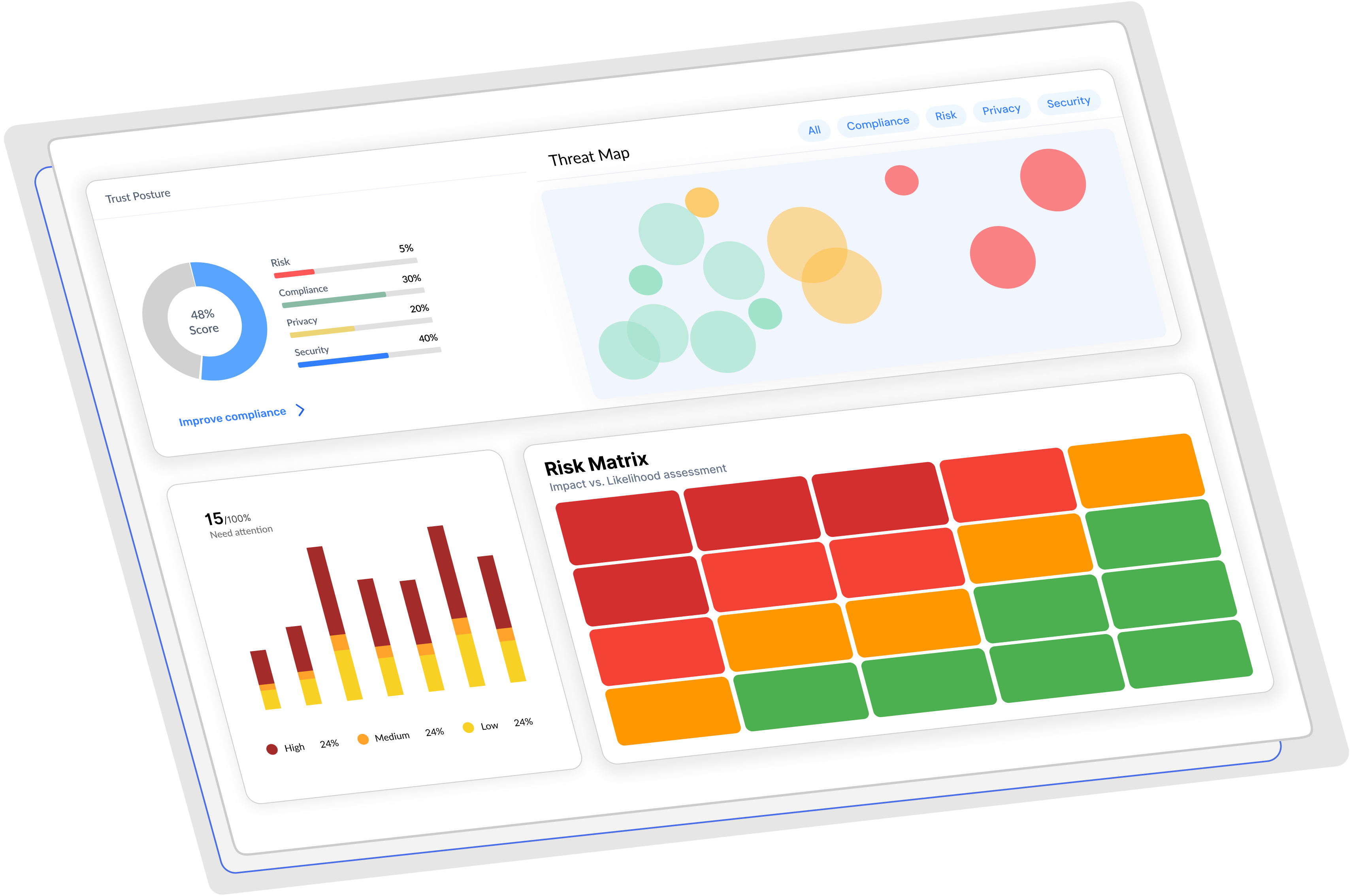

Cybervergent gives you instant visibility, automated governance, and continuous assurance across cloud-native and hybrid environments. Move fast, stay compliant, and build securely.

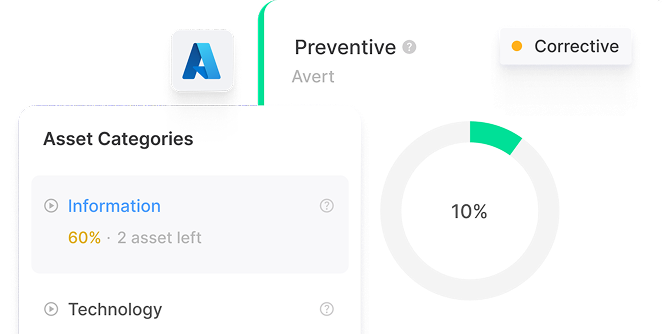

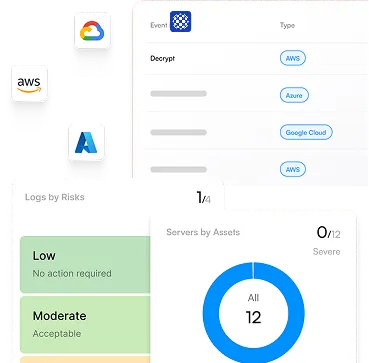

Real-time Posture Visibility

Continuous monitoring and visibility across cloud and on-premise environments with AI-powered threat detection

- Infrastructure Monitoring

- Automated Threat Detection

- Real-time Alerting

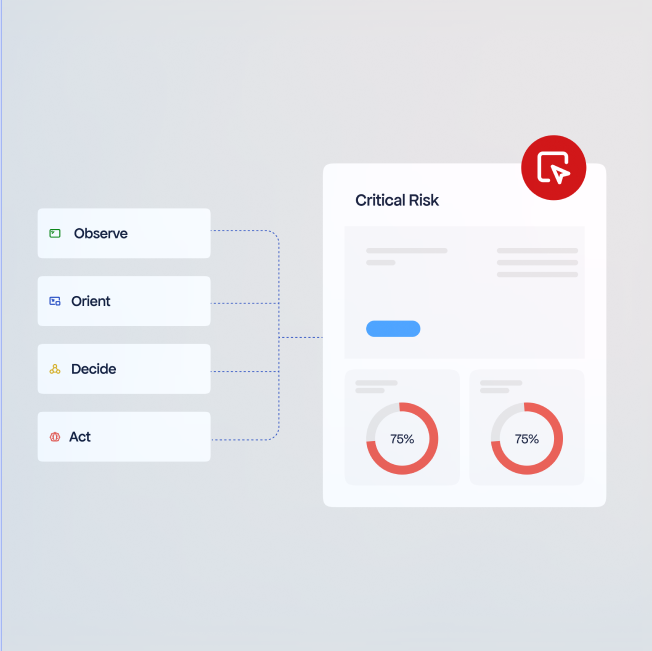

Rapid Response

Accelerate incident response with AI-powered recommendations and automated remediation workflows

- AI Recommendations

- Playbooks

- Faster Remediation

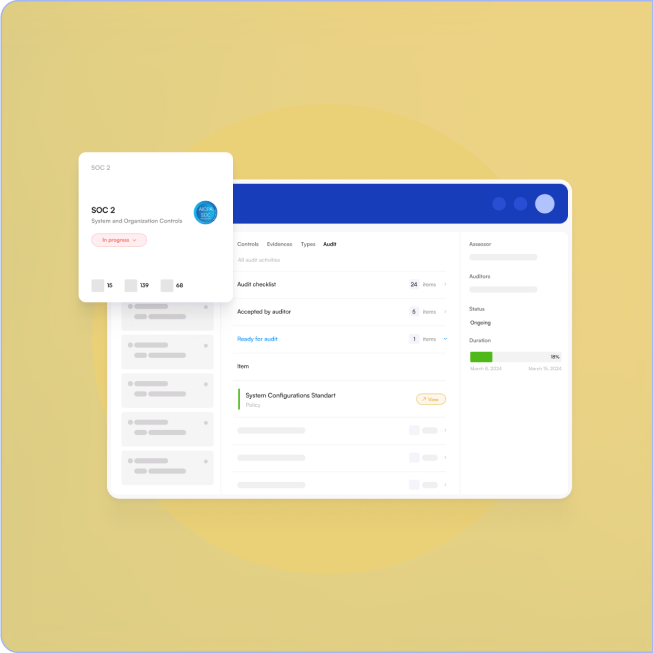

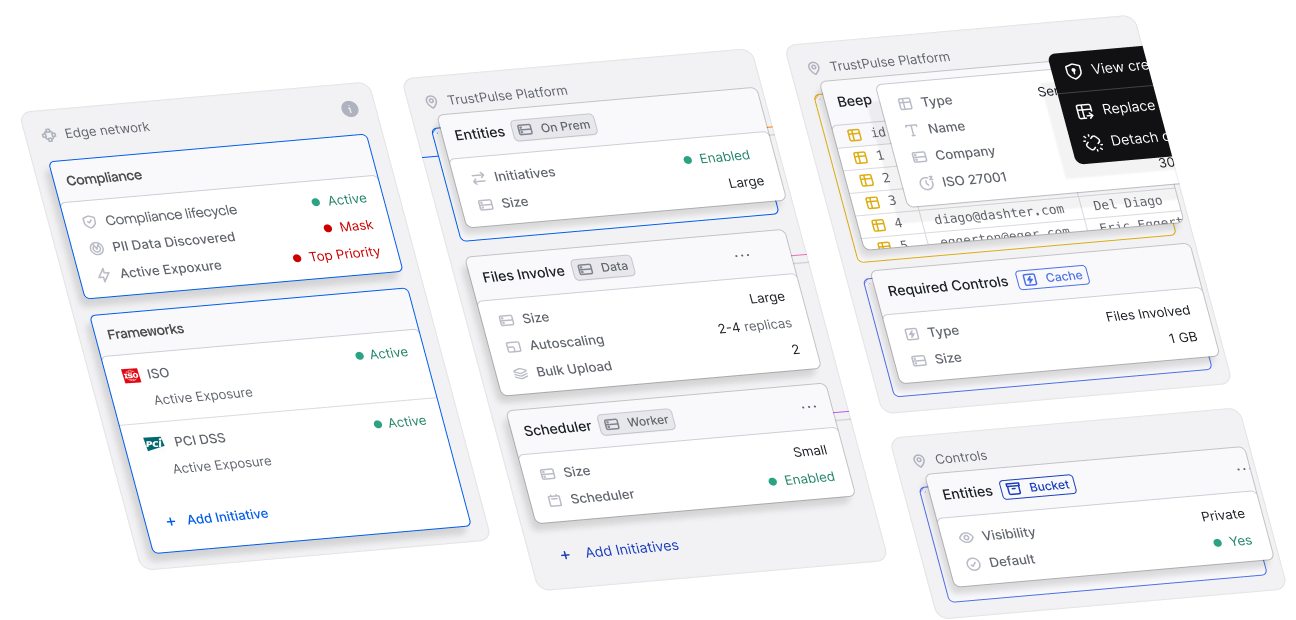

Compliance Automation

Streamline compliance workflows with automated reporting and continuous compliance monitoring

- Automated Reports

- Continuous Monitoring

- Policy Enforcement

Artificial intelligence is built into the fundamental architecture and operations of the platform. AI algorithms are core to how Cybervergent collects, processes, analyzes, and acts on data. This enables the following:

Data security observability

Cybervergent provides you with a clear and concise understanding of your entire digital environment's security, risk, and compliance status. The platform aggregates data from various connected sources and assets to provide this comprehensive overview.

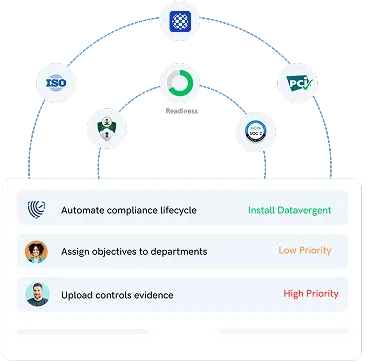

Prove trust and win deals

Cybervergent provides a comprehensive trust report to advertise and share compliance documentation, making enterprise reviews a breeze and accelerating your sales cycle.

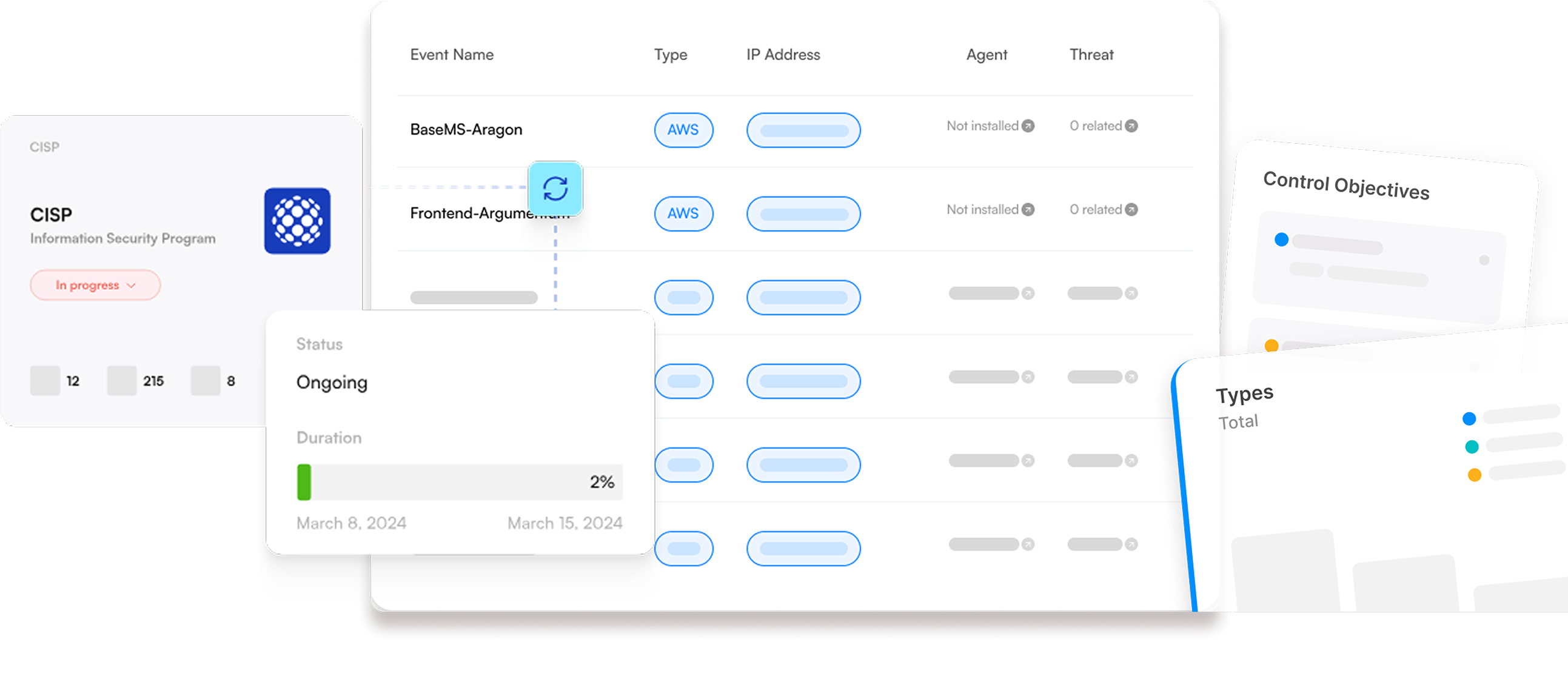

AI-native threat detection with automated response

Advanced machine learning algorithms continuously analyze your environment to detect anomalies, threats, and security incidents with precision and speed.

Comprehensive risk analysis with executive reporting

Get detailed risk assessments, vulnerability analysis, and executive-level reporting that provides clear insights into your security posture and business impact.

A comprehensive approach to unlocking digital trust!

Join industry-leading professionals and hundreds of companies using Cybervergent to establish digital trust via context-aware automation across governance, compliance, risk, and data security.

Across cloud, on-premise, and hybrid.

Unified Point of Control

Cybervergent ensures that security and governance policies are applied uniformly regardless of where the data or workload resides.

Posture Management

Continuous monitoring of configuration drift, vulnerabilities, and compliance violations.

Workload Protection

Protecting applications and data on assets in both cloud and on-premise environments.

Compliance & Governance

Establishing, maintaining, and enforcing policies, approval workflows, and frameworks that ensure the integrity, reliability, and ethical use of digital systems and data.

Data Security

Full observability of your data, and protection against unauthorized access, use, disclosure, disruption, modification, or destruction.

Risk

Identifying, assessing, and mitigating potential threats and vulnerabilities before they materialize.

Your Path to Stronger Compliance Starts Here

Discover how Cybervergent helps you secure data, simplify compliance, and improve business resilience.

Request a demo